Artificial Intelligence (AI) Made Easy to Grasp

You have probably heard about AI, or Artificial Intelligence, in your daily life. It has become the buzzword of today’s rapidly evolving technology field. But what does AI truly mean? What is its history? Why is AI so essential right now? How are we using AI today? And what will the future of AI be?

Let’s explore this post to answer your questions.

Highlights:

- Artificial Intelligence is the term used to describe the intelligence displayed by machines and software programs.

- It is the imitation of natural intelligence by devices and systems, which are programmed to pick up on and imitate human behavior.

- In the modern world, artificial intelligence has become extremely popular.

- These machines can learn from experience and carry out jobs that humans would do.

- As they develop, AI and other emerging technologies will significantly affect our quality of life.

What is Artificial Intelligence (AI)?

Technology has been advancing rapidly in the modern world, and we are constantly coming into direct contact with these technological inventions.

Artificial Intelligence is one of the fastest-developing fields of computer science and is projected to usher in a new era of technological innovation through the development of intelligent machines.

Artificial intelligence is already deeply ingrained in our world. It is at work in a range of subfields, from the general to the specialized, including self-driving vehicles, theorem proving, board games, artwork generation, language translation, and music performance.

Artificial Intelligence is one of the most fascinating and wide-ranging areas of computer science, with a promising future. AI has the potential to enable a machine to process data the way a human does.

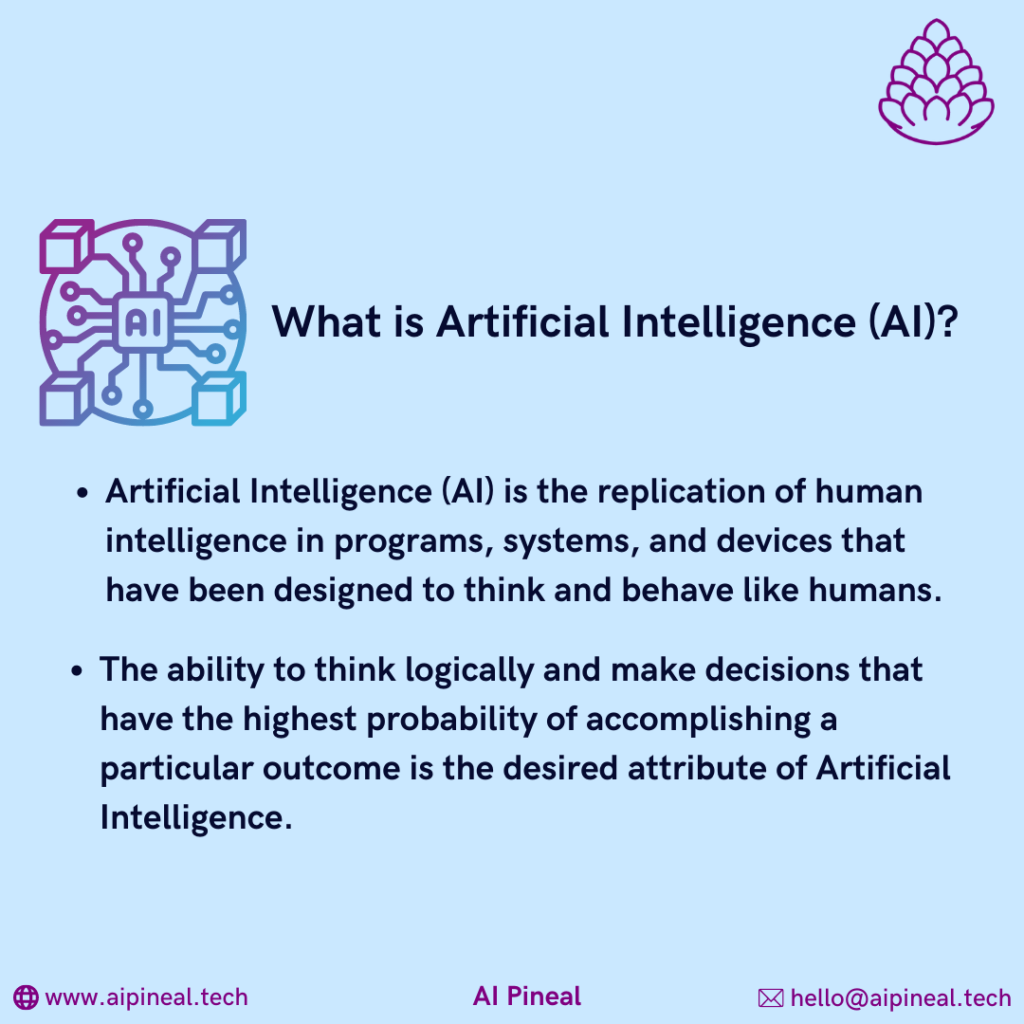

Definition of artificial intelligence (AI):

According to Britannica, “Artificial Intelligence (AI) is the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings.”

Artificial Intelligence (AI) is the replication of human intelligence in programs, systems, and devices that have been designed to think and behave like humans.

The term can also be applied to any machine that exhibits characteristics of human intelligence, like understanding and problem-solving.

In other words, artificial intelligence (AI) is the ability of a computer, or of a machine controlled by a computer, to carry out activities usually accomplished by thinking creatures.

The ideal attribute of Artificial Intelligence is the ability to reason logically and make the decisions that have the highest probability of achieving a particular outcome.
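As a rough illustration of "choosing the decision with the highest probability of achieving an outcome," here is a minimal Python sketch. The function name `choose_action`, the action names, and the probability values are all hypothetical and invented for this example; a real system would have to estimate such probabilities from data.

```python
def choose_action(success_probabilities):
    """Return the action whose estimated chance of reaching the goal is highest."""
    return max(success_probabilities, key=success_probabilities.get)


# Hypothetical estimates for a delivery robot deciding how to reach another floor.
estimates = {"take_stairs": 0.35, "take_elevator": 0.80, "wait": 0.05}

best = choose_action(estimates)
print(best)  # take_elevator
```

The sketch only covers the final selection step. Most of the difficulty in practice lies in producing good probability estimates in the first place.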

Machine learning (ML), a subfield of artificial intelligence, is the idea that software programs can automatically learn from and adapt to new data without human involvement.

Deep learning algorithms enable this independent learning by absorbing massive amounts of unstructured data, including text, pictures, and videos.

Although no AI can perform the wide range of tasks that a typical person can, some AIs can match or beat people at specific tasks.

The History of AI:

Intelligent robots and artificial beings first appeared in Greek mythology. Aristotle’s development of the syllogism and its use of deductive reasoning marked a turning point in humankind’s quest to understand its own intelligence. Although its roots are deep, artificial intelligence as we know it today has existed for less than a century.

Let’s review the key milestones in artificial intelligence:

1943: The earliest work now recognized as artificial intelligence, “A Logical Calculus of the Ideas Immanent in Nervous Activity,” was published in 1943 by Warren McCulloch and Walter Pitts. They proposed a model of artificial neurons.

1949: In 1949, Donald Hebb published “The Organization of Behavior: A Neuropsychological Theory,” which proposed a rule for modifying the strength of connections between neurons.

1950: In his 1950 paper “Computing Machinery and Intelligence,” English mathematician Alan Turing proposed a test of whether a machine can exhibit behavior indistinguishable from a human’s. It is now known as the Turing Test.

The first artificial neural network computer, named “SNARC,” was built the following year, in 1951, by Harvard graduates Dean Edmonds and Marvin Minsky.

1956: Allen Newell and Herbert A. Simon developed “Logic Theorist,” often called the “first artificial intelligence program,” in 1956. The program proved 38 of 52 mathematical theorems and found new, more elegant proofs for some of them.

The term “Artificial Intelligence,” coined by American scientist John McCarthy, was adopted at the Dartmouth Conference the same year, which established AI as an academic discipline.

1959: While working at IBM in 1959, Arthur Samuel coined the term “machine learning.”

1963: John McCarthy at Stanford established an Artificial Intelligence Lab in 1963.

1966: Joseph Weizenbaum developed ELIZA, the first chatbot, in 1966.

Uneven future

1972: In 1972, Japan constructed WABOT-1, the first humanoid robot.

1974 to 1980: This period is considered the first AI winter. Government funding dried up and public interest in AI fell sharply, leaving many researchers unable to pursue their work fully.

1980: AI returned in 1980. The AI winter ended when Digital Equipment Corporation introduced R1, the first commercially successful expert system.

The American Association for Artificial Intelligence held its first national conference that year at Stanford University.

1987 to 1993: The second AI winter set in as investors and governments again cut funding for AI research, with expert systems proving costly to maintain and cheaper general-purpose computers undercutting specialized AI hardware.

1997: A machine defeats a person! IBM’s Deep Blue beat Garry Kasparov, the reigning world chess champion, becoming the first computer to defeat a reigning world champion in a match.

21st century AI:

2002: AI entered the home in 2002 with the launch of Roomba, iRobot’s robotic vacuum cleaner.

2005: Starting in 2005, the American military invested heavily in autonomous robots like iRobot’s “PackBot” and Boston Dynamics’ “BigDog.”

2006: Companies including Google, Facebook, Twitter, and Netflix began utilizing AI around 2006.

2008: In 2008, Google made strides in the domain of speech recognition.

2011: Watson, an IBM computer, won the quiz show Jeopardy!, in which contestants must answer complex questions and riddles. Watson showed that it could understand natural language and answer difficult questions quickly.

2012: In 2012, Andrew Ng, a founder of the Google Brain deep learning project, and his colleagues trained a large neural network on 10 million unlabeled images taken from YouTube videos. The network learned to recognize a cat without ever being told what a cat is, marking a major shift in deep learning and artificial neural networks.

2014: Google unveiled its own self-driving car prototype in 2014; one of its earlier self-driving test cars had already passed a state driving test.

Alexa from Amazon also debuted in 2014.

2016: Hanson Robotics activated Sophia, a humanoid robot capable of facial recognition, spoken conversation, and facial expressions, in 2016. The following year Saudi Arabia granted her citizenship, making her the first “robot citizen.”

2020: In the early stages of the SARS-CoV-2 pandemic, Baidu made its LinearFold AI algorithm available to scientific and medical teams working on a vaccine. The algorithm could predict the secondary structure of the virus’s RNA sequence in just 27 seconds, about 120 times faster than earlier methods.

2021: Facebook released an AI model named Compositional Perturbation Autoencoder (CPA) that predicts the effects of drug combinations, with the aim of helping researchers find treatments for hard-to-treat diseases.

Artificial intelligence is spreading rapidly across sectors with each passing day. AI is no longer a thing of the future; it is already here.

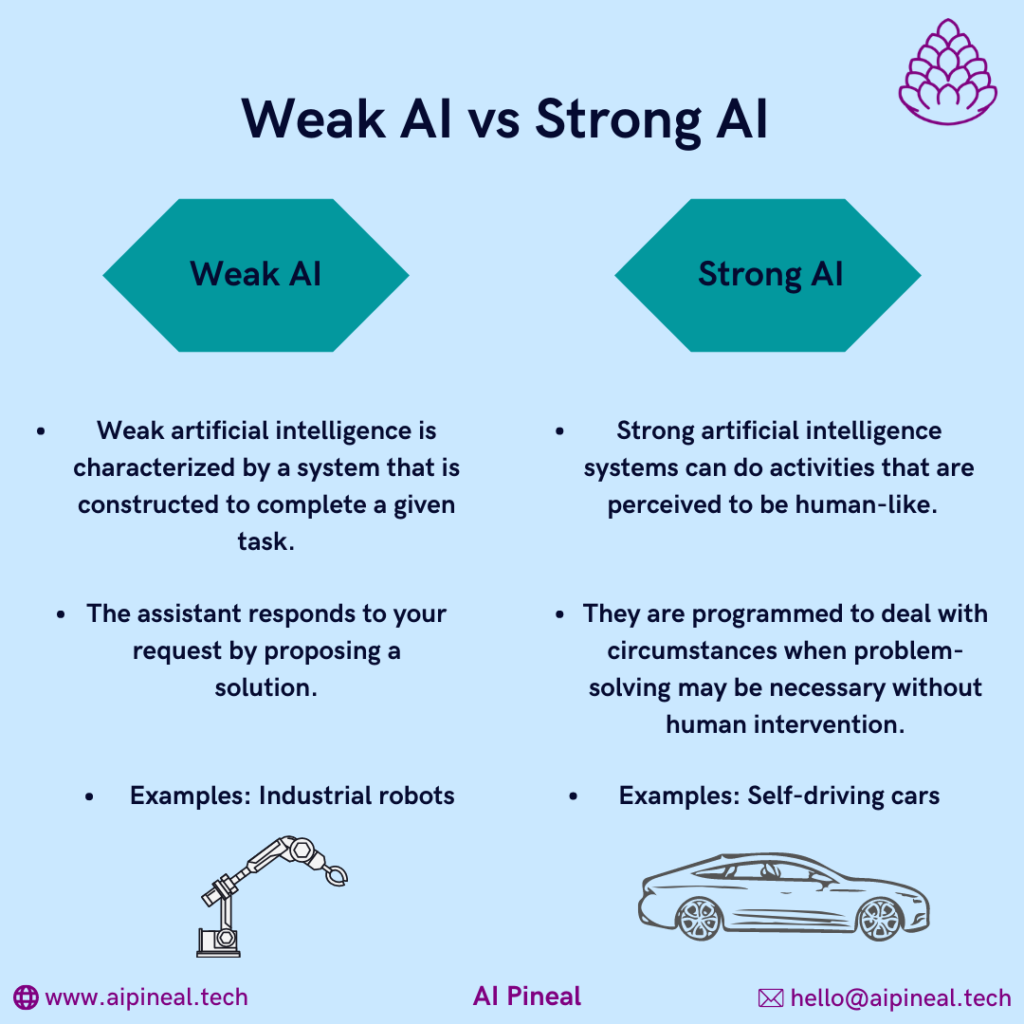

What is weak AI?

Weak artificial intelligence is a system built to complete one particular task. Chess programs and personal assistants like Apple’s “Siri” and Amazon’s “Alexa” are examples of weak AI systems: you ask the assistant a question, and it responds with an answer.

Industrial robots are another example of weak AI, also known as narrow AI.

What is strong AI?

Strong artificial intelligence systems can do activities that are perceived to be human-like. These tend to be more intricate and complex systems. They are programmed to deal with circumstances when problem-solving may be necessary without human intervention. A strong AI system can deploy fuzzy logic to transfer knowledge from one domain to another when faced with an unexpected task and come up with a solution on its own.

This kind of technology is useful in applications like operating rooms in medical facilities and self-driving automobiles.

Types of artificial intelligence

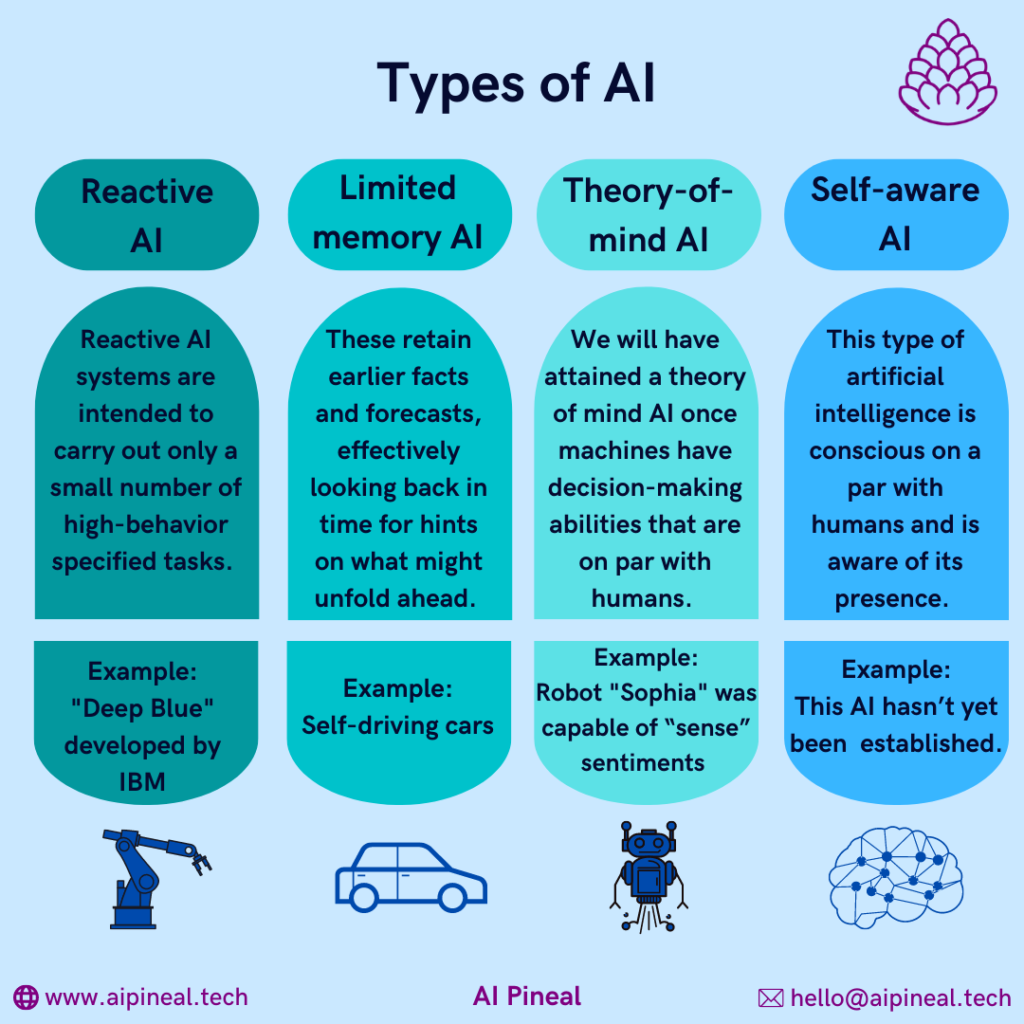

Artificial Intelligence can primarily be categorized into one of four types.

1. Reactive artificial intelligence:

A reactive AI follows the most basic AI principles and, as its name suggests, can use its intelligence only to perceive and respond to the world in front of it. Because reactive AI has no memory, it cannot draw on prior experiences to guide current decisions.

Because they perceive the world directly and nothing more, reactive AI systems are designed to carry out only a small number of specialized tasks. Intentionally narrowing a reactive machine’s worldview has a benefit, however: this kind of AI is more dependable and trustworthy, since it responds the same way to the same stimuli every time.

Despite its narrow scope and the difficulty of modifying it, reactive machine AI can achieve a certain level of complexity and offers dependability when built to carry out repeatable tasks.

Examples: “Deep Blue,” developed by IBM, and “AlphaGo,” designed by Google, are prime examples of game-playing reactive AI.
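The defining property of a reactive system, responding only to the current input and always in the same way, can be sketched as a plain function with no stored state. The thermostat scenario, the function name `reactive_thermostat`, and the temperature thresholds below are assumptions chosen for illustration; they are far simpler than game-playing systems like Deep Blue.

```python
def reactive_thermostat(temperature_c):
    """Map the current reading straight to an action; nothing is remembered."""
    if temperature_c < 18.0:
        return "heat"
    if temperature_c > 24.0:
        return "cool"
    return "idle"


# The first and last readings are identical, so the responses are too.
readings = [16.5, 21.0, 26.0, 16.5]
actions = [reactive_thermostat(r) for r in readings]
print(actions)  # ['heat', 'idle', 'cool', 'heat']
```

Because the function keeps no history, it cannot notice trends such as a room that is steadily warming. That is the trade-off described above: predictable behavior in exchange for a narrow view of the world.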

2. Limited memory artificial intelligence:

When collecting information and assessing alternatives, limited memory AI can retain earlier facts and forecasts, effectively looking back in time for hints on what might unfold ahead. Reactive machines lack the complexity and capability that limited memory AI offers.

Limited memory AI is created either when a team continuously trains a model to analyze and use new data, or when an AI environment is built so that models can be trained and renewed automatically.

Six actions should be taken when implementing limited memory AI in machine learning:

i. Data should be created for training.

ii. The machine learning model must be developed.

iii. The model should be capable of providing forecasts.

iv. The model should be able to receive feedback from humans or the environment.

v. The model should then be capable of holding that feedback as knowledge.

vi. These steps must be repeated in a cycle.
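The six steps above can be sketched in a few lines of Python. This is only a toy under stated assumptions: the class `LimitedMemoryModel`, its nearest-neighbour prediction rule, the `capacity` parameter, and the braking data are all hypothetical and chosen for brevity. Real limited memory systems, such as those in self-driving cars, are far more elaborate.

```python
class LimitedMemoryModel:
    """A 1-nearest-neighbour predictor that keeps a bounded memory of examples."""

    def __init__(self, training_data, capacity=100):
        # Steps i and ii: create training data and build the model from it.
        self.capacity = capacity
        self.memory = list(training_data)[-capacity:]

    def predict(self, x):
        # Step iii: predict the label of the closest remembered example.
        nearest = min(self.memory, key=lambda example: abs(example[0] - x))
        return nearest[1]

    def give_feedback(self, x, correct_label):
        # Steps iv and v: accept feedback and store it as new knowledge,
        # dropping the oldest example once the memory is full.
        self.memory.append((x, correct_label))
        self.memory = self.memory[-self.capacity:]


# Hypothetical data: (distance to obstacle in metres, action).
model = LimitedMemoryModel([(5.0, "brake"), (50.0, "cruise")])

before = model.predict(20.0)         # closest example is 5.0, so "brake"
model.give_feedback(20.0, "cruise")  # feedback: braking was unnecessary
after = model.predict(22.0)          # closest example is now 20.0, so "cruise"

# Step vi: in practice the predict/feedback cycle keeps repeating.
print(before, after)  # brake cruise
```

The bounded `memory` list is what makes the memory "limited": once it is full, the oldest observations are discarded as new feedback arrives.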

Examples: Limited memory AI is applied in self-driving cars.

3. Theory-of-mind artificial intelligence:

Theory-of-mind AI is dynamic and has a spectrum of learning and memory characteristics.

We will have attained a theory of mind AI once machines have decision-making abilities that are on par with humans. The future of AI relies on this. The capability of robots to grasp, recall, and modify behavior in response to emotions, just as people can in interpersonal interaction, is an essential feature of this AI.

To replicate how smooth this mechanism is in human interaction, systems would need to quickly alter their behavior based on emotions, which would be one barrier on the pathway to the theory of mind AI.

However, if this achievement is successful, it may pave the way for robots to engage in everyday responsibilities, such as offering company to humans.

Examples: The humanoid robot “Sophia,” with her human-like appearance, is able to “sense” emotions and react accordingly.

Kismet was able to recognize emotions and replicate them through its facial features, including its eyebrows, eyes, ears, and lips.

4. Self-aware artificial intelligence:

As the name suggests, self-aware AI possesses consciousness and self-awareness. Becoming self-aware is the final step for AI; it can come only after theory-of-mind AI has been achieved, which is likely to take a very long time. This type of artificial intelligence would have human-level consciousness and be aware of its own existence as well as the presence and emotional states of others. It would be able to understand what other people need based not only on what they say to it but on how they say it.

Self-awareness in artificial intelligence depends on human researchers being able to replicate consciousness so that it can be built into computers.

Example: No such AI exists yet, since we have neither the components nor the methods needed to build it.

Why is artificial intelligence so useful?

AI is essential because, in some circumstances, it can outperform people, especially at tedious, detail-oriented tasks, and it can give businesses previously unknown insights into their operations.

Artificial intelligence is a fantastic tool since it provides several advantages, including:

Assessment and Precision: AI can analyze data far faster than humans and often more accurately, and it can use its interpretation of that data to make intelligent choices.

Automation: Without getting tired, AI can automate laborious operations and jobs.

Improvement: AI can improve products and services by enhancing end-user experiences and providing more relevant product recommendations.

Broadly speaking, AI accelerates product development and business activities by helping businesses make better decisions. Many of today’s biggest and most prosperous businesses use AI to improve their operations and outperform rivals.

How do we use AI today?

AI is currently used widely, at varying levels of sophistication, in a multitude of scenarios. Popular AI implementations include chatbots found on websites, voice assistants built into smart speakers (e.g., Alexa or Siri), and recommendation systems that suggest what you might like next.

AI is applied to automate manufacturing processes, reduce many kinds of repetitive cognitive work (e.g., tax accounting or editing), and create forecasts for the weather and the economy.

AI is also deployed for a plethora of other tasks, including language comprehension, driving autonomous cars, and gaming.

What is the future of AI?

Humans have always been captivated by technology and science fiction, and in the field of AI, we are currently experiencing the most significant developments in the history of humankind. The realm of technology has identified artificial intelligence as its next big thing. Global organizations are developing ground-breaking, significant advances in machine learning and artificial intelligence.

Artificial intelligence will transform the future of every sector and every person, and it has also been the prime driver of technologies like robotics, IoT, and big data. Given its pace of growth, it will remain a leading source of technological innovation in the coming years.

As these AI technologies progress, they will significantly affect our social environment and quality of life.
